Boosting Continuous Control with Consistency Policy

In the 23rd International Conference on Autonomous Agents and Multiagent Systems, AAMAS 2024

Yuhui Chen 1,2, Haoran Li 1,2, Dongbin Zhao 1,2

2 School of Artificial Intelligence, University of Chinese Academy of Sciences

Abstract

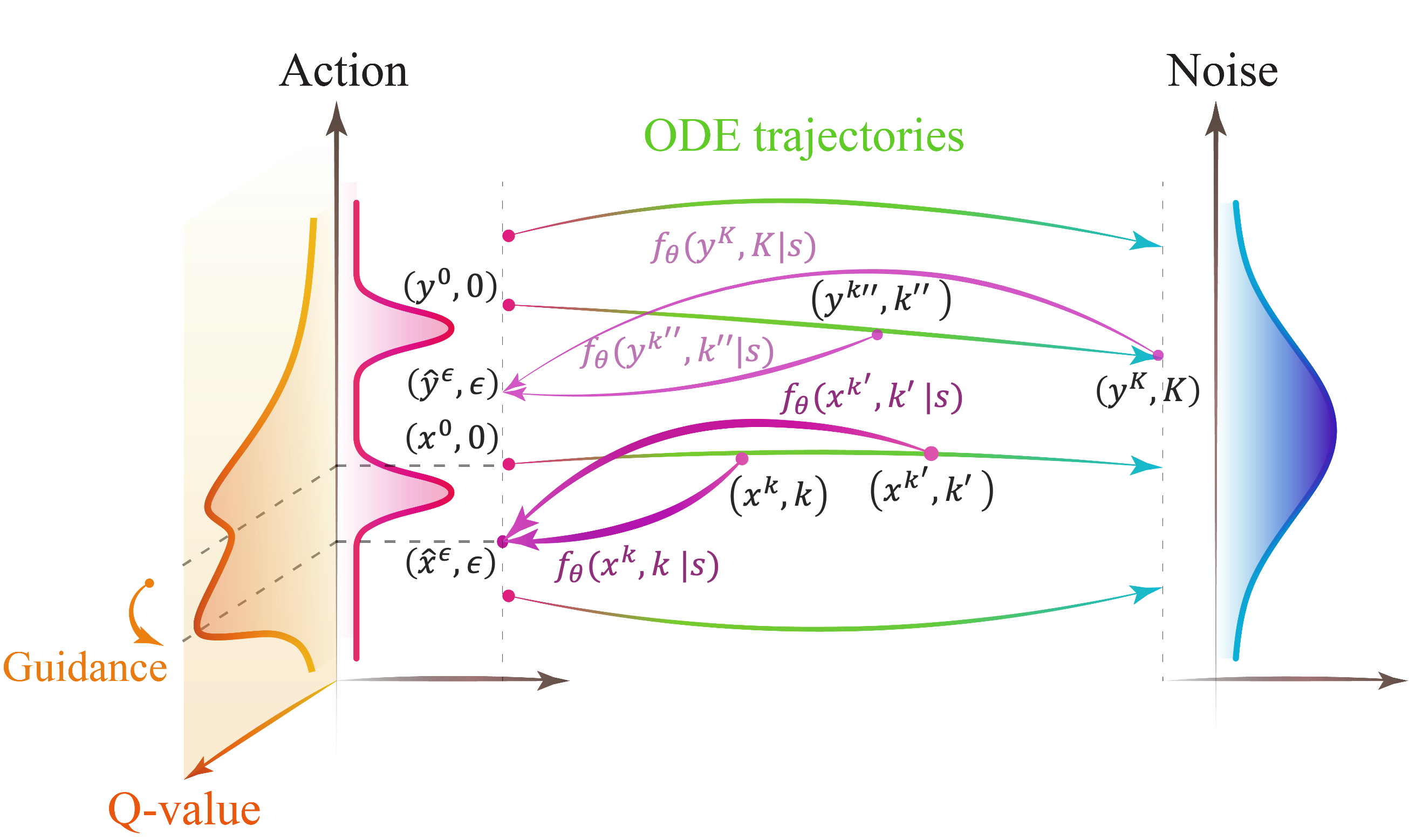

Due to its training stability and strong expressiveness, the diffusion model has attracted considerable attention in offline reinforcement learning. However, it also brings several challenges: 1) the large number of diffusion steps required makes diffusion-model-based methods time-inefficient and limits their use in real-time control; 2) how to achieve policy improvement with accurate guidance for a diffusion-model-based policy is still an open problem. Inspired by the consistency model, we propose a novel time-efficient method named Consistency Policy with Q-Learning (CPQL), which derives actions from noise in a single step. By establishing a mapping from the reverse diffusion trajectories to the desired policy, we simultaneously address the issues of time efficiency and inaccurate guidance when updating a diffusion-model-based policy with the learned Q-function. We demonstrate that CPQL can achieve policy improvement with accurate guidance for offline reinforcement learning, and that it can be seamlessly extended to online RL tasks. Experimental results indicate that CPQL achieves new state-of-the-art performance on 11 offline and 21 online tasks, and improves inference speed by nearly 45 times compared to Diffusion-QL.

Overview

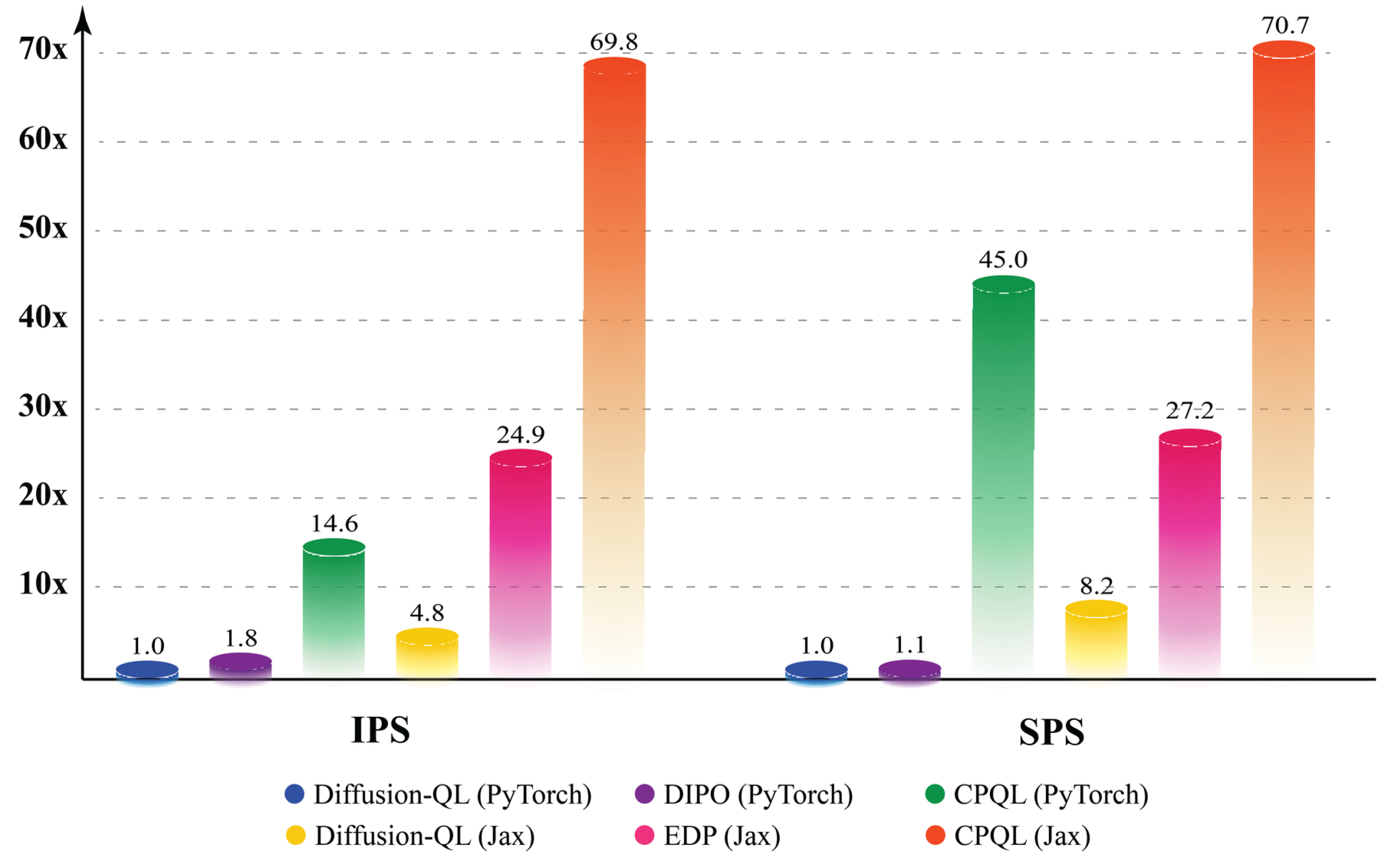

We propose a novel time-efficient method named Consistency Policy with Q-Learning (CPQL). Compared to Diffusion-QL, it improves training and inference speeds by nearly 15x and 45x, respectively, while also improving performance. We also conduct a theoretical analysis showing that CPQL can achieve policy improvement with accurate guidance, and we propose an empirical loss that replaces the consistency loss to stabilize policy training. Additionally, CPQL extends seamlessly to online RL tasks. In our experiments, CPQL achieves state-of-the-art performance on 11 offline and 21 online tasks.
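To make the single-step idea concrete, here is a minimal sketch of how a consistency-style policy could map noise to an action with one network evaluation. It assumes the standard consistency-model parameterization (a skip term plus a scaled network output, with the boundary condition that the output equals the input at the smallest noise level). The constants `SIGMA_DATA`, `EPS`, and `T_MAX`, the stand-in `toy_network`, and the function names are all illustrative assumptions, not the CPQL implementation.

```python
import numpy as np

# Assumed constants for illustration only (not taken from the paper).
SIGMA_DATA = 0.5   # assumed scale of the action data
EPS = 0.002        # assumed smallest noise level
T_MAX = 80.0       # assumed largest noise level


def c_skip(t):
    # Weight on the noisy input; equals 1 at t = EPS.
    return SIGMA_DATA**2 / ((t - EPS) ** 2 + SIGMA_DATA**2)


def c_out(t):
    # Weight on the network output; equals 0 at t = EPS.
    return SIGMA_DATA * (t - EPS) / np.sqrt(SIGMA_DATA**2 + t**2)


def toy_network(state, noisy_action, t, weights):
    # Hypothetical stand-in for a learned network F(state, x, t).
    features = np.concatenate([state, noisy_action, [np.log(t)]])
    return np.tanh(weights @ features)


def consistency_policy(state, noisy_action, t, weights):
    # f(x, t | s) = c_skip(t) * x + c_out(t) * F(s, x, t)
    return c_skip(t) * noisy_action + c_out(t) * toy_network(
        state, noisy_action, t, weights
    )


def sample_action(state, weights, action_dim, rng):
    # Single step: draw noise at the largest level, map it straight to an action.
    x_T = T_MAX * rng.standard_normal(action_dim)
    action = consistency_policy(state, x_T, T_MAX, weights)
    return np.clip(action, -1.0, 1.0)  # assumes actions are bounded in [-1, 1]
```

With a state of dimension 3 and an action of dimension 2, `weights` would have shape `(2, 6)`, and `sample_action(state, weights, 2, np.random.default_rng(0))` returns one bounded action from a single call to the network.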

Experiments

We conduct several experiments on the D4RL benchmark, dm_control tasks, and Gym MuJoCo tasks to evaluate the performance and time efficiency of the consistency policy.

Performance for offline tasks

D4RL benchmark

CPQL outperforms other methods on 11 offline tasks from the D4RL benchmark, achieving a 4% improvement over Diffusion-QL by using accurate value guidance and ODE trajectory mapping. This demonstrates substantial policy improvement together with strong policy representation ability.

Performance for online tasks

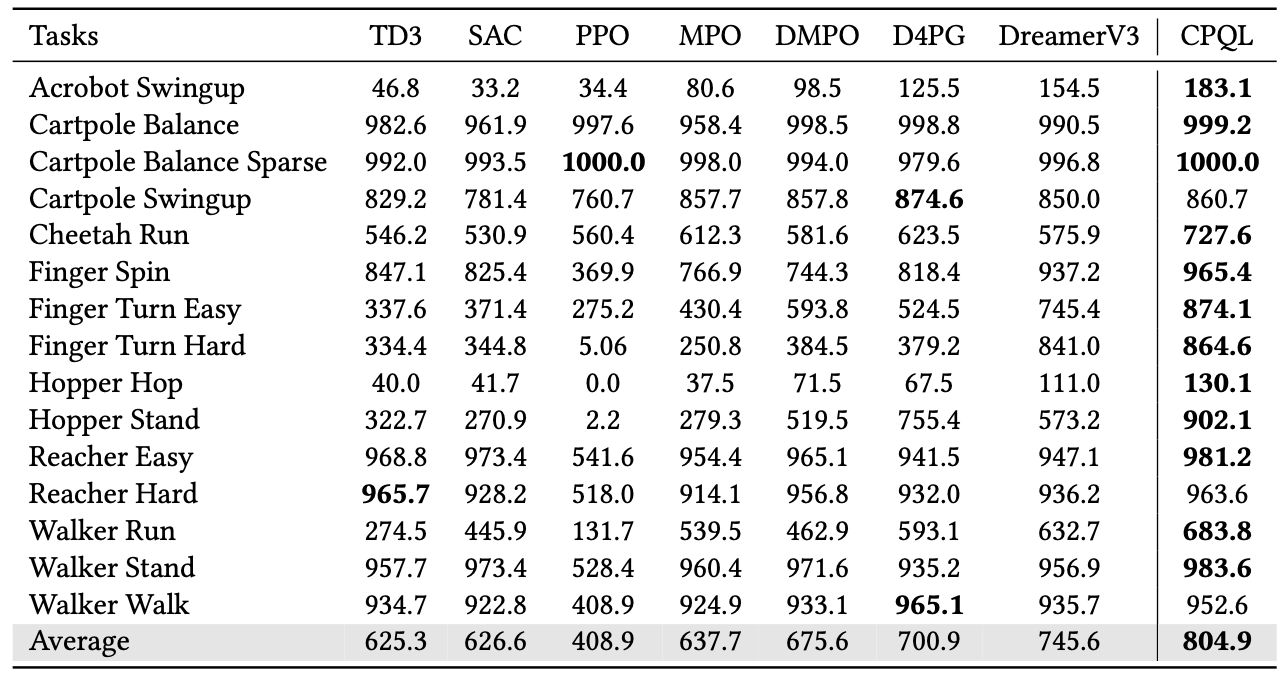

dm_control benchmark

CPQL outperforms state-of-the-art methods, including DreamerV3, on 15 tasks from the dm_control suite, showing an 8% improvement on average within 500K interactions, while effectively handling complex tasks without relying on inaccurate diffusion guidance.

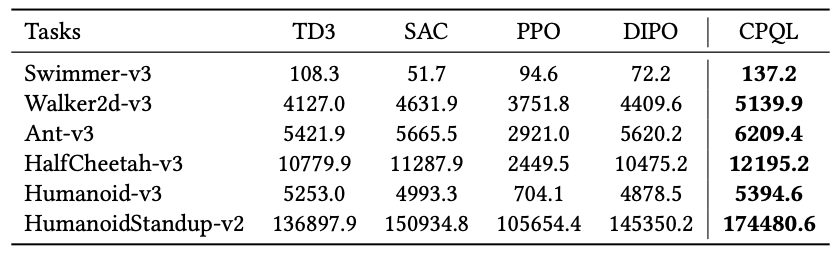

Gym MuJoCo benchmark

CPQL outperforms DIPO on 6 Gym MuJoCo tasks, demonstrating significant performance advantages within 1M interactions by maintaining better exploration ability and avoiding issues related to dataset coverage and limited sampling diversity.

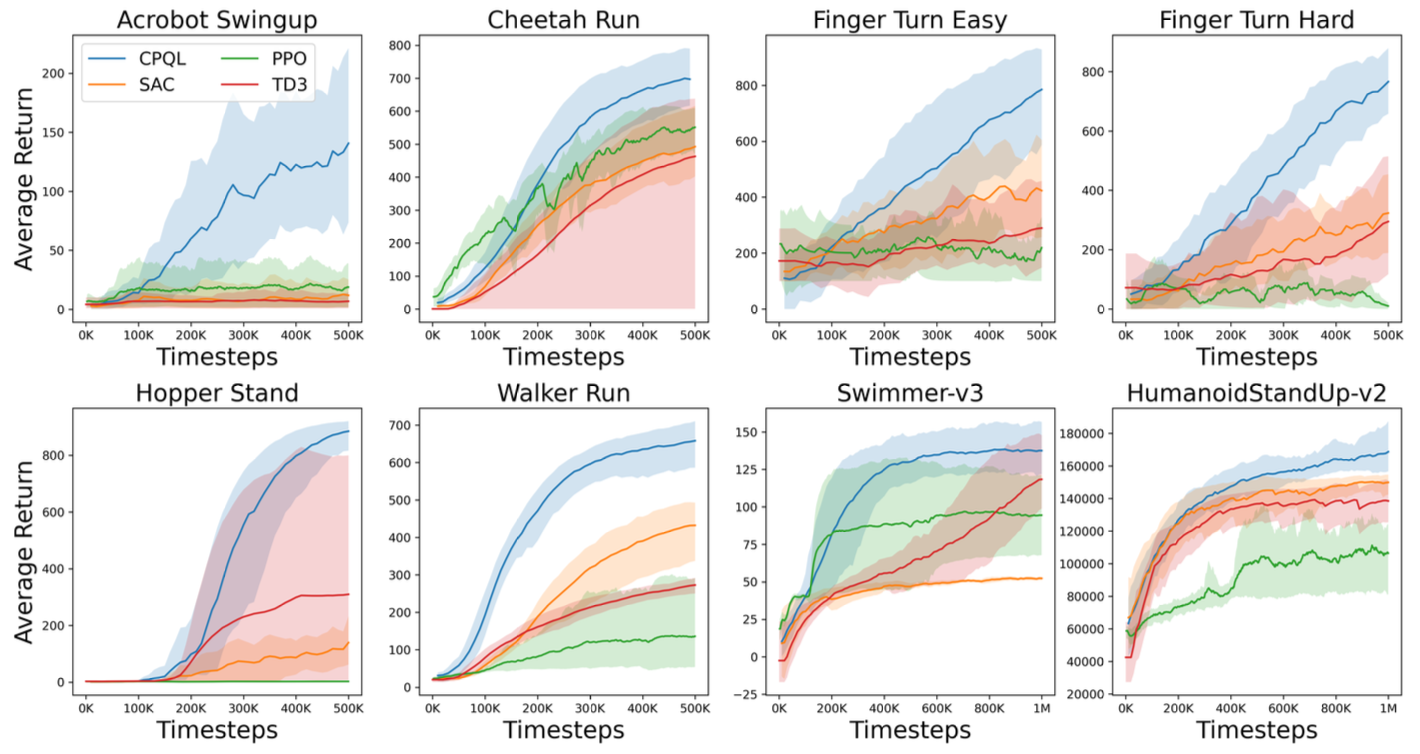

Training curves for online tasks

CPQL demonstrates superior training stability, sample efficiency, and performance, outperforming other algorithms within 500K (dm_control tasks) and 1M (Gym MuJoCo tasks) interactions.

Time Efficiency

CPQL significantly outperforms other diffusion-based methods in terms of time efficiency, achieving up to 15 times faster training speed and 45 times faster inference speed compared to Diffusion-QL, while also surpassing EDP in both training and sampling speeds.
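One contributor to this gap is the number of network evaluations needed per action. The toy sketch below only counts calls: an iterative sampler invokes the network once per step, while a single-step sampler invokes it once. `CountingDenoiser`, `iterative_sample`, and `single_step_sample` are hypothetical names, the update inside the denoiser is a placeholder rather than a real diffusion or consistency update, and the measured speedups reported above also depend on factors this sketch does not model.

```python
import numpy as np


class CountingDenoiser:
    """Toy stand-in for a policy network that records how often it is called."""

    def __init__(self):
        self.calls = 0

    def __call__(self, x, t):
        self.calls += 1
        return x / (1.0 + t)  # placeholder update, not a real denoising rule


def iterative_sample(denoiser, x, num_steps):
    # Multi-step sampling: one network evaluation per step.
    for t in np.linspace(1.0, 0.0, num_steps, endpoint=False):
        x = denoiser(x, t)
    return x


def single_step_sample(denoiser, x):
    # Single-step sampling: exactly one network evaluation.
    return denoiser(x, 1.0)


if __name__ == "__main__":
    noise = np.random.default_rng(0).standard_normal(4)

    multi = CountingDenoiser()
    iterative_sample(multi, noise, num_steps=5)

    single = CountingDenoiser()
    single_step_sample(single, noise)

    print("iterative calls:", multi.calls)
    print("single-step calls:", single.calls)
```

The number of steps (`num_steps=5`) is an arbitrary choice for the example.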

Cite our paper

If you find our research helpful and would like to reference it in your work, please consider using one of the following citations, depending on the format that best suits your needs:

- For the arXiv version:

@article{chen2023boosting, title={Boosting Continuous Control with Consistency Policy}, author={Chen, Yuhui and Li, Haoran and Zhao, Dongbin}, journal={arXiv preprint arXiv:2310.06343}, year={2023} }

- Or, to cite the version presented at AAMAS 2024:

@inproceedings{chen2023boosting, author={Yuhui Chen and Haoran Li and Dongbin Zhao}, title={Boosting Continuous Control with Consistency Policy}, booktitle={Proceedings of the 23rd International Conference on Autonomous Agents and Multiagent Systems, {AAMAS} 2024, Auckland, New Zealand, May 6-10, 2024}, pages={335--344}, publisher={ACM}, doi={10.5555/3635637.3662882}, }

Contact

If you have any questions, please feel free to contact Yuhui Chen.