ConRFT: A Reinforced Fine-tuning Method for VLA Models via Consistency Policy

In Robotics: Science and Systems XXI (RSS 2025)

Yuhui Chen 1,2, Shuai Tian 1,2, Shugao Liu 1,2, Yingting Zhou 1,2, Haoran Li 1,2, Dongbin Zhao 1,2

2 School of Artificial Intelligence, University of Chinese Academy of Sciences

In the Media

Synced (机器之心)

- Our paper was featured in an article by Synced (机器之心), a well-known Chinese AI Technology & Industry Review website.

- 👉 Read the article (in Chinese)

Shenlan AI (深蓝AI)

- Our work was also covered by Shenlan AI (深蓝AI), a leading Chinese AI education and research platform.

- 👉 Read the article (in Chinese)

Introduction Video

Abstract

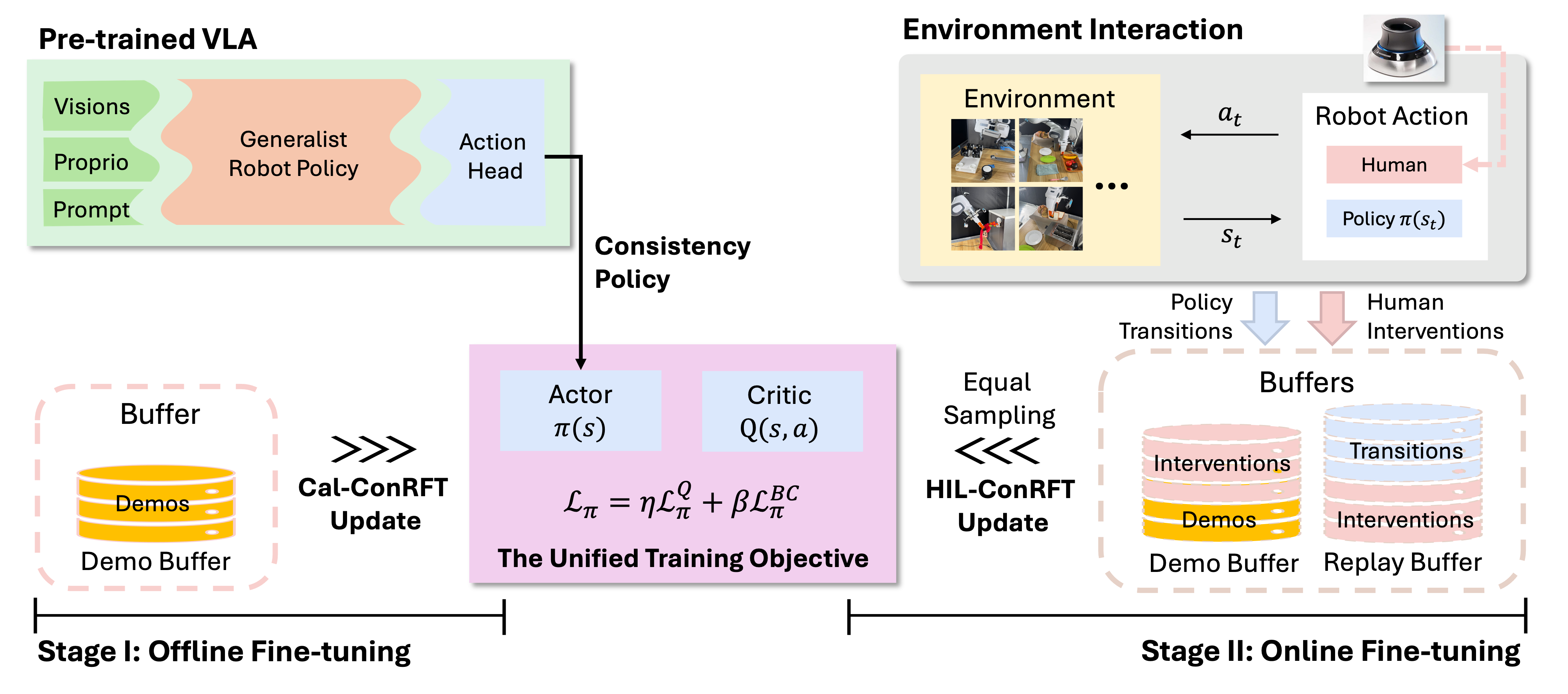

Vision-Language-Action (VLA) models have shown substantial potential in real-world robotic manipulation. However, fine-tuning these models through supervised learning struggles to achieve robust performance because demonstrations are limited and inconsistent, especially in contact-rich environments. To address these challenges, we propose ConRFT, a reinforced fine-tuning approach for VLA models that consists of offline and online fine-tuning stages sharing a unified consistency-based training objective. In the offline stage, our method integrates behavior cloning and Q-learning to effectively extract a policy from a small set of demonstrations and to stabilize value estimation. In the online stage, the VLA model is further fine-tuned via a consistency policy, with human interventions to ensure safe exploration and high sample efficiency. We evaluate our approach on eight diverse real-world manipulation tasks. It achieves an average success rate of 96.3% within 45–90 minutes of online fine-tuning, outperforming prior supervised methods with a 144% improvement in success rate and a 1.9x shorter episode length. This work highlights the potential of integrating reinforcement learning to enhance the performance of VLA models for real-world robotic applications.
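A consistency policy can produce an action in a single network call rather than through many denoising steps. As a rough illustration only, the sketch below shows the standard consistency-model parameterization from Song et al. (2023), f(x, σ) = c_skip(σ)·x + c_out(σ)·F(x, σ), applied to an action vector. It is not ConRFT's action head: the noise constants, the linear stand-in network `raw_net`, and the helper names `consistency_fn` and `sample_action` are hypothetical, and the observation embedding is just a random vector standing in for whatever features the VLA backbone provides.

```python
import numpy as np

# Hypothetical noise-schedule constants (Karras-style defaults), not ConRFT's.
SIGMA_MIN = 0.002   # smallest noise level (epsilon)
SIGMA_DATA = 0.5    # assumed std of normalized actions
SIGMA_MAX = 80.0    # noise level used for one-step sampling


def c_skip(sigma: float) -> float:
    # Weight on the noisy input; equals 1 exactly at SIGMA_MIN.
    return SIGMA_DATA**2 / ((sigma - SIGMA_MIN) ** 2 + SIGMA_DATA**2)


def c_out(sigma: float) -> float:
    # Weight on the network output; equals 0 exactly at SIGMA_MIN.
    return SIGMA_DATA * (sigma - SIGMA_MIN) / np.sqrt(SIGMA_DATA**2 + sigma**2)


def raw_net(noisy_action, sigma, obs_embedding, weights):
    # Hypothetical stand-in for the learned network F_theta: a fixed linear map
    # over [noisy action, observation embedding, noise level], squashed by tanh.
    features = np.concatenate([noisy_action, obs_embedding, [sigma]])
    return np.tanh(weights @ features)


def consistency_fn(noisy_action, sigma, obs_embedding, weights):
    # f_theta(x, sigma) = c_skip(sigma) * x + c_out(sigma) * F_theta(x, sigma),
    # which satisfies the boundary condition f_theta(x, SIGMA_MIN) = x.
    out = raw_net(noisy_action, sigma, obs_embedding, weights)
    return c_skip(sigma) * noisy_action + c_out(sigma) * out


def sample_action(obs_embedding, weights, action_dim, rng):
    # One-step generation: draw noise at SIGMA_MAX and map it straight to an action.
    noisy = rng.normal(size=action_dim) * SIGMA_MAX
    return consistency_fn(noisy, SIGMA_MAX, obs_embedding, weights)


# Usage with made-up sizes: a 7-dim action and a 16-dim observation embedding.
rng = np.random.default_rng(0)
weights = rng.normal(size=(7, 7 + 16 + 1))
obs = rng.normal(size=16)
action = sample_action(obs, weights, 7, rng)
```

The skip/out scaling is what makes one-step sampling well posed: at the smallest noise level the function is forced to return its input, so training only has to teach it to map every noisier point on the same trajectory to that clean endpoint.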

Overview

We present a two-stage Consistency-based Reinforced Fine-Tuning (ConRFT) framework for VLA models with a unified training objective in real-world environments. In the offline stage, we propose Cal-ConRFT, which integrates offline RL with a consistency-based BC loss to extract an efficient policy and value function, providing a robust initialization from a limited set of pre-collected demonstrations. In the online stage, we propose HIL-ConRFT, which aims at stable and rapid policy improvement and incorporates human interventions for safe exploration and sample efficiency in real-world environments.
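Both stages share one training objective that mixes imitation with value maximization. A minimal sketch, assuming the objective takes the form β·L_BC − η·E[Q(s, π(s))], where L_BC is a consistency-based BC loss (noise a demonstration action, map it back with the consistency function, and penalize the distance to the clean action). The function names, the symbols β and η, and this exact weighting form are illustrative assumptions; this page does not specify them, and the critic's own loss (the Cal-QL-style offline part) is not shown.

```python
import numpy as np


def consistency_bc_loss(consistency_fn, demo_actions, sigmas, rng):
    # Noise each demonstration action at its own level sigma, ask the
    # consistency function to map it back, and penalize the squared distance
    # to the clean action (summed over action dims, averaged over the batch).
    noise = rng.normal(size=demo_actions.shape)
    noisy = demo_actions + sigmas[:, None] * noise
    denoised = consistency_fn(noisy, sigmas)
    return float(np.mean(np.sum((denoised - demo_actions) ** 2, axis=-1)))


def unified_policy_loss(bc_loss, q_values, beta, eta):
    # Hypothetical unified objective: beta weights imitation of the demos,
    # eta weights the critic's value of the policy's own actions. The loss is
    # minimized, so higher Q values lower it.
    return beta * bc_loss - eta * float(np.mean(q_values))
```

For example, `unified_policy_loss(1.0, np.array([2.0, 4.0]), beta=1.0, eta=0.5)` evaluates to 1.0 − 0.5 × 3.0 = −0.5. Shifting weight from β toward η moves the policy from staying close to the demonstrations toward exploiting the critic; which weights ConRFT actually uses in each stage is not stated here.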

Experiments

Policy Rollouts

Policy Robustness

With reward-driven learning, the policy demonstrates enhanced robustness to external human disturbance, ensuring more reliable task completion.

Comparison with Other Baselines

PA-RL

Both our method and PA-RL achieve high success rates. However, our approach further optimizes the policy toward shorter episodes, yielding a more efficient policy overall.

Our method shows an emergent behavior of pulling down on the knot after hanging it to confirm that it is attached, thereby improving the success rate. In contrast, PA-RL fails to discover such new behaviors.

HIL-SERL

Time

A Showcase

After 40 minutes of online fine-tuning, the fine-tuned Octo-small model achieves a 70% success rate on the Thread Needle task.

Note: This task is not included in the paper. We present it here as an additional demonstration of our method’s capabilities on VLA model fine-tuning.

Cite our paper

If you find our research helpful and would like to reference it in your work, please consider using the following citation:


For the arXiv version:

@article{chen2025conrft,
  title={ConRFT: A Reinforced Fine-tuning Method for VLA Models via Consistency Policy},
  author={Chen, Yuhui and Tian, Shuai and Zhou, Yingting and Liu, Shugao and Li, Haoran and Zhao, Dongbin},
  journal={arXiv preprint arXiv:2502.05450},
  year={2025}
}

Contact

If you have any questions, please feel free to contact Yuhui Chen.